A/B testing sample size calculation is crucial for businesses aiming to make data-driven decisions. Understanding the right sample size and timing can transform your marketing strategies. Read on to discover how to master these essential aspects of A/B testing.

I remember running my first A/B test after college. It wasn’t until then that I grasped the basics of determining a big enough A/B test sample size or running the test long enough to get statistically significant results.

But figuring out what “big enough” and “long enough” meant was not easy. Googling for answers didn’t help much, as the information often applied to ideal, theoretical scenarios rather than real-world marketing.

It turns out I wasn’t alone. Many of our customers also ask how to determine A/B testing sample size and time frame. So, I decided to do the research to help answer this question for all of us. In this post, I’ll share what I’ve learned to help you confidently determine the right sample size and time frame for your next A/B test.

A/B Test Sample Size Calculation Formula

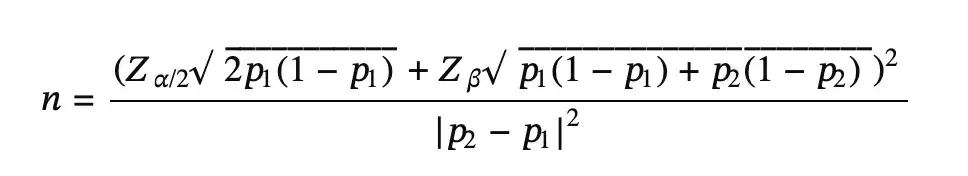

When I first saw the A/B test sample size formula, I was taken aback. Here’s how it looks:

– n is the sample size needed for each variation

– 𝑝1 is the baseline conversion rate (the rate you see today)

– 𝑝2 is the conversion rate after the smallest lift you want to be able to detect, that is, 𝑝1 plus the absolute minimum detectable effect (MDE): 𝑝2 = 𝑝1 + MDE

– 𝑍𝛼/2 is the z-score from the z table that corresponds to 𝛼/2 (e.g., 1.96 for a 95% confidence level, where 𝛼 = 0.05)

– 𝑍𝛽 is the z-score from the z table that corresponds to 𝛽, the chance of missing a real effect (e.g., 0.84 for 80% power, where 𝛽 = 0.20)
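Written out in full, a standard form of this formula that matches these definitions (giving n for each variation) is below. Some versions use a single pooled conversion rate in the numerator instead, so treat this as one common variant.

```latex
n = \frac{\left(Z_{\alpha/2} + Z_{\beta}\right)^{2}\,\left[\,p_1(1 - p_1) + p_2(1 - p_2)\,\right]}{\left(p_2 - p_1\right)^{2}}
```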

Pretty complicated formula, right? Luckily, there are tools that let us plug in as few as three numbers to get our results, and I will cover them in this guide.
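To see what those calculators do behind the scenes, here is a minimal Python sketch of the formula, assuming the unpooled form implied by the definitions above (n per variation, two-sided test). The function name `sample_size_per_variation` and its defaults (𝛼 = 0.05, 80% power) are illustrative choices, not any particular tool's API.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline, mde_abs, alpha=0.05, power=0.80):
    """Return the number of people needed in EACH variation.

    baseline: p1, the current conversion rate (e.g. 0.10 for 10%)
    mde_abs:  absolute minimum detectable effect (e.g. 0.02 for +2 points)
    alpha:    significance level for a two-sided test
    power:    probability of detecting a true lift of mde_abs
    """
    p1 = baseline
    p2 = baseline + mde_abs  # p2 = p1 + absolute MDE
    if not (0 < p1 < 1 and 0 < p2 < 1 and mde_abs != 0):
        raise ValueError("rates must be between 0 and 1 and the MDE non-zero")

    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 0.84 for 80% power

    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)  # round up: you can't test a fraction of a person


# 10% baseline, aiming to detect a lift to 12%
print(sample_size_per_variation(0.10, 0.02))  # 3839
```

Notice how quickly n grows as the MDE shrinks: because the difference is squared in the denominator, halving the detectable lift roughly quadruples the sample size you need.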

A/B Testing Sample Size Calculation and Timing

In theory, to conduct a perfect A/B test and determine a winner between Variation A and Variation B, you need to wait until you have enough results to see whether there is a statistically significant difference between the two. Call a winner before then, and you may be acting on random noise.
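One common way to check whether a difference is statistically significant is a two-proportion z-test. The sketch below assumes a two-sided test with a pooled conversion rate; the function name and the example counts are hypothetical, and real testing tools may use other methods (sequential or Bayesian approaches, for instance).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for B's conversion rate versus A's."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data (0% or 100% conversion)")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: A converts 200 of 2,000; B converts 260 of 2,000
z, p = two_proportion_z_test(200, 2000, 260, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 95%" if p < 0.05 else "not significant yet")
```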

Depending on your company, sample size, and how you execute the A/B test, getting statistically significant results could happen in hours, days, or weeks — and you have to stick it out until you get those results.
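Your sample size also tells you roughly how long a test has to run. Here is a back-of-the-envelope sketch, assuming traffic is split evenly across variations and stays steady from day to day; the function name and the traffic figures are hypothetical.

```python
import math


def estimated_test_days(n_per_variation, daily_visitors, variations=2):
    """Days needed for every variation to reach n_per_variation visitors."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = n_per_variation * variations
    return math.ceil(total_needed / daily_visitors)


# Hypothetical: 3,839 visitors needed per variation, two variations, ~500 visitors a day
print(estimated_test_days(3839, 500))  # 16
```

Many teams also round up to whole weeks so that both weekday and weekend behavior show up in the results.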

For many A/B tests, waiting is no problem. Testing headline copy on a landing page? It’s cool to wait a month for results. The same goes for blog CTA creative: you’d be going for the long-term lead generation play anyway.

But certain aspects of marketing demand shorter timelines with A/B testing. Take email as an example. With email, waiting for an A/B test to conclude can be problematic for several practical reasons.

Each Email Send Has a Finite Audience

Unlike a landing page (where you can continue to gather new audience members over time), once you run an email A/B test, that’s it — you can’t “add” more people to that A/B test. So you’ve got to figure out how to squeeze the most juice out of your emails.

This usually requires you to send an A/B test to the smallest portion of your list needed to get statistically significant results, pick a winner, and send the winning variation to the rest of the list.
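Here is a rough sketch of that test-then-send approach. It assumes you already know the per-variation sample size (for example, from a calculator) and that recipients are assigned at random; `split_email_list` and the addresses are hypothetical.

```python
import random


def split_email_list(recipients, n_per_variation, variations=2, seed=None):
    """Randomly carve out equal test groups; everyone else gets the winner later."""
    needed = n_per_variation * variations
    if needed > len(recipients):
        raise ValueError(
            f"list has {len(recipients)} recipients but the test needs {needed}"
        )
    shuffled = list(recipients)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    groups = [
        shuffled[i * n_per_variation:(i + 1) * n_per_variation]
        for i in range(variations)
    ]
    remainder = shuffled[needed:]  # receives the winning variation
    return groups, remainder


recipients = [f"user{i}@example.com" for i in range(20000)]
(group_a, group_b), rest = split_email_list(recipients, 3839, seed=42)
print(len(group_a), len(group_b), len(rest))  # 3839 3839 12322
```

If the function raises an error, your list is too small to detect the effect you chose, and you would need to accept a larger minimum detectable effect or a lower confidence level.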

Running an Email Marketing Program Means Juggling Multiple Sends

If you spend too much time collecting results, you could miss your next scheduled send, which could hurt more than sending a winner that isn’t statistically significant to one segment of your database.

Email Sends Need to Be Timely

Your marketing emails are optimised to deliver at a certain time of day. They might support the timing of a new campaign launch and/or land in your recipients’ inboxes at a time they’d love to receive them.

So if you wait for your email to be fully statistically significant, you might miss out on being timely and relevant — which could defeat the purpose of sending the emails in the first place.

That’s why email A/B testing programs have a “timing” setting built-in: At the end of that time frame, if neither result is statistically significant, one variation